“4.3: Parallel and Distributed Computing” Everything You Need to Know


In today’s rapidly evolving digital world, the ability to process vast amounts of data quickly and efficiently is more critical than ever. Parallel and Distributed Computing are two key paradigms that enable systems to handle complex, data-intensive tasks by harnessing the power of multiple processors and interconnected systems. From scientific research and financial modeling to artificial intelligence and cloud services, these techniques form the backbone of modern computing. In this comprehensive guide, we’ll explore what parallel and distributed computing are, trace their historical evolution, break down their core components and methods, and examine their wide-ranging applications and benefits. Whether you’re an IT professional, researcher, or simply curious about the technology that powers our digital age, this article will equip you with all the insights you need to master parallel and distributed computing.

Introduction: Supercharging Computation in the Digital Age

Imagine a single processor trying to solve complex problems one step at a time—a task that could take days or even months. Now, envision a network of thousands of processors working together simultaneously, slicing through enormous datasets in minutes. This is the transformative power of parallel and distributed computing.

Did you know that some of the world’s most powerful supercomputers use these techniques to perform quadrillions of calculations per second? In today’s interconnected world, where applications from weather forecasting to online gaming rely on real-time data processing, parallel and distributed computing are more than just buzzwords—they are the engine driving innovation and efficiency.

In this post, we will cover:

- A clear and concise definition of Parallel and Distributed Computing.

- Historical milestones that have shaped their evolution.

- An in-depth exploration of key components, architectures, and techniques.

- Real-world examples and case studies demonstrating their practical applications.

- The significance, benefits, and broad impact on society, industry, and science.

- Common misconceptions and FAQs to clarify essential concepts.

- Modern trends and emerging practices that are shaping the future of these computing paradigms.

Join us as we dive into the world of parallel and distributed computing and discover how these powerful methodologies are revolutionizing our ability to process data and solve complex problems.

What Are Parallel and Distributed Computing? A Clear Definition

Parallel and Distributed Computing are approaches used to perform multiple computations simultaneously to solve complex problems more efficiently.

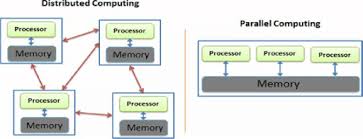

Parallel Computing:

In parallel computing, tasks are divided into smaller sub-tasks that are executed simultaneously on multiple processors or cores within a single machine. The main goal is to speed up computation by performing many operations at once.

Distributed Computing:

Distributed computing involves a network of independent computers (nodes) that work together to solve a problem. These computers communicate over a network and coordinate their actions, making it possible to process data on a scale that exceeds the capabilities of any single machine.
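The parallel half of this definition can be sketched in Python with the standard library’s `concurrent.futures` module. This is a minimal illustration, assuming CPU-bound work that splits into independent chunks; the function names `sum_of_squares` and `parallel_sum_of_squares` are hypothetical.

```python
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor

# ThreadPoolExecutor can be passed as executor_cls for quick tests.


def sum_of_squares(chunk: list[int]) -> int:
    """One independent sub-task: runs on a single worker."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values: list[int], workers: int = 4,
                            executor_cls: type[Executor] = ProcessPoolExecutor) -> int:
    """Split the input into chunks, process them simultaneously, combine the results."""
    size = max(1, -(-len(values) // workers))  # ceiling division: chunk length
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with executor_cls(max_workers=workers) as pool:
        # map() hands one chunk to each worker; sum() combines the partial results.
        return sum(pool.map(sum_of_squares, chunks))
```

From a script guarded by `if __name__ == "__main__":`, a call such as `parallel_sum_of_squares(list(range(1_000_000)))` spreads the chunks over four worker processes. A process pool is the default because, in CPython, the global interpreter lock keeps CPU-bound threads from running truly in parallel; `ThreadPoolExecutor` is accepted as a drop-in for quick tests or I/O-bound work.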

Essential Characteristics

Concurrency:

Both paradigms enable multiple computations to occur at the same time, dramatically reducing overall processing time.

Scalability:

They are designed to handle increasing amounts of data and computation by adding more processors (parallel) or more nodes (distributed) to the system.

Communication and Coordination:

In distributed systems, nodes must communicate effectively using protocols to ensure that data is shared and tasks are synchronized. In parallel systems, processors often share memory or communicate through high-speed interconnects.

Fault Tolerance:

Distributed systems often include redundancy and recovery mechanisms to ensure that the failure of one node does not compromise the entire system.

Resource Optimization:

Both approaches aim to maximize the use of available computing resources, thereby enhancing performance and efficiency.

These fundamental properties allow parallel and distributed computing to solve problems that are infeasible for single-processor systems, making them essential for modern applications.

Historical and Contextual Background

Early Beginnings in Computing

The Advent of Multiprocessing

- Early Computers:

In the early days of computing during the 1940s and 1950s, computers were large, expensive machines designed for specific tasks. These machines operated sequentially, processing one instruction at a time. However, the need for faster computation soon led to the idea of multiprocessing, where more than one processor could work on a task simultaneously.

Pioneering Efforts

- The Atlas Computer:

The Atlas computer, developed at the University of Manchester with Ferranti and first operational in 1962, was one of the first machines to overlap the execution of instructions and to support multiprogramming. Although primitive by today’s standards, such early systems laid the groundwork for more sophisticated parallel architectures.

Evolution of Parallel Computing

Supercomputing Era:

In the 1970s and 1980s, supercomputers began to adopt parallel processing to tackle scientific and engineering problems. Cray’s vector supercomputers, later built with multiple processors (as in the Cray X-MP), and massively parallel designs such as the Connection Machine achieved unprecedented computational speeds.

Shift to Multi-Core Processors:

By the 2000s, advancements in semiconductor technology led to the development of multi-core processors in personal computers. This shift made parallel computing accessible not only to high-end research institutions but also to everyday users.

The Rise of Distributed Computing

ARPANET and Early Networking:

The evolution of distributed computing is closely tied to the development of computer networks. ARPANET, developed in the late 1960s, demonstrated that computers could communicate and work together over long distances, forming the basis of modern distributed systems.

Client-Server Models and the Internet:

In the 1980s and 1990s, the client-server model emerged, allowing multiple client machines to connect to a central server. This model evolved with the growth of the Internet, leading to distributed systems that support e-commerce, online gaming, and cloud computing.

Modern Distributed Systems:

Today, distributed computing underpins cloud services, big data analytics, and content delivery networks (CDNs). These systems leverage the power of thousands of interconnected nodes to process data and provide services on a global scale.

Notable Milestones

The Development of MPI (Message Passing Interface):

In the 1990s, MPI (first standardized in 1994) became the de facto standard for message passing between processes on distributed-memory systems such as clusters, enabling efficient parallel processing across multiple nodes.

The Emergence of MapReduce:

Google’s MapReduce framework, introduced in the mid-2000s, revolutionized large-scale data processing: developers write map and reduce functions, and the framework handles distribution, scheduling, and recovery from machine failures.

Cloud Computing Platforms:

Platforms such as AWS, Microsoft Azure, and Google Cloud have democratized access to distributed computing resources, enabling organizations of all sizes to leverage massive computing power on demand.

These historical developments highlight the dynamic evolution of parallel and distributed computing, from early experimental systems to the advanced, interconnected infrastructures that power our modern digital economy.

In-Depth Exploration: Key Components and Techniques in Parallel and Distributed Computing

Developing and optimizing parallel and distributed computing systems requires a deep understanding of their components, architectures, and the techniques used to coordinate multiple processing units. This section breaks down the key elements that make these systems work.

1. Parallel Computing Components and Techniques

Multi-Core Processors

Definition:

Multi-core processors contain multiple processing units (cores) on a single chip, allowing them to execute multiple tasks concurrently.

Benefits:

Increased computational power, improved performance for multitasking, and energy efficiency through shared resources.

Shared Memory vs. Distributed Memory

Shared Memory Systems:

In these systems, multiple processors access a common memory space. Communication between processors is fast because they share data directly, but this can lead to contention issues.

Distributed Memory Systems:

Each processor has its own private memory, and processors communicate by passing messages. This model scales well for large systems but requires more complex communication protocols.

Hybrid Approaches:

Many modern systems use a hybrid approach, combining shared and distributed memory to balance performance and scalability.

Parallel Algorithms

Techniques:

Algorithms designed for parallel execution are divided into independent tasks that can run simultaneously. Common techniques include data parallelism, task parallelism, and pipeline parallelism.

Examples:

- Parallel Sorting: Algorithms like parallel quicksort and mergesort.

- Matrix Multiplication: Dividing the computation across multiple cores to speed up calculations.
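The sorting example can be sketched as a simple two-phase variant of parallel mergesort: workers sort separate chunks at the same time, and the sorted runs are then merged. This is an illustrative sketch, assuming picklable items and enough data to justify the cost of starting worker processes; `parallel_merge_sort` is a hypothetical name.

```python
import heapq
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor

# ThreadPoolExecutor can be passed as executor_cls for quick tests.


def parallel_merge_sort(items: list[int], workers: int = 4,
                        executor_cls: type[Executor] = ProcessPoolExecutor) -> list[int]:
    """Two-phase parallel mergesort: sort chunks in parallel, then merge the runs."""
    if len(items) < 2:
        return list(items)
    size = -(-len(items) // workers)  # ceiling division: chunk length
    chunks = [items[i:i + size] for i in range(0, len(items), size)]
    # Phase 1 (parallel): each worker sorts its own chunk independently.
    with executor_cls(max_workers=workers) as pool:
        runs = list(pool.map(sorted, chunks))
    # Phase 2 (sequential): k-way merge of the sorted runs, as in mergesort's merge step.
    return list(heapq.merge(*runs))
```

The merge step runs on a single core, so this variant speeds up only the sorting phase; a fully parallel mergesort also parallelizes the merges.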

Synchronization and Communication

Mechanisms:

Techniques such as locks, semaphores, and barriers are used to coordinate the execution of parallel tasks, ensuring data consistency and preventing race conditions.

Example:

In a shared memory system, a lock might be used to prevent two threads from modifying the same variable simultaneously.
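A minimal Python sketch of that lock, using the standard `threading` module (`counter`, `increment`, and `run` are hypothetical names). `counter += 1` is a read-modify-write, so without the lock two threads could read the same old value and one increment could be lost.

```python
import threading

counter = 0
counter_lock = threading.Lock()


def increment(times: int) -> None:
    """Add 1 to the shared counter `times` times."""
    global counter
    for _ in range(times):
        with counter_lock:  # only one thread at a time runs the update below
            counter += 1


def run(threads: int = 8, times: int = 10_000) -> int:
    """Start several threads that update the same variable, then return the total."""
    global counter
    counter = 0
    workers = [threading.Thread(target=increment, args=(times,)) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()  # wait until every thread has finished
    return counter
```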

2. Distributed Computing Components and Techniques

Networked Systems

Definition:

Distributed systems consist of multiple independent computers (nodes) connected by a network. These nodes work together to achieve a common goal.

Key Components:

- Nodes/Servers: Individual computers that perform specific tasks.

- Interconnects: Communication links, such as LANs, WANs, and the Internet, that connect nodes.

- Middleware: Software that facilitates communication and data exchange between nodes.

Distributed Algorithms

Techniques:

Distributed algorithms are designed to function across multiple nodes, handling tasks such as data replication, consensus building, and fault tolerance.

Examples:

- MapReduce: A programming model for processing large data sets with a distributed algorithm on a cluster.

- Consensus Algorithms: Protocols like Paxos and Raft that let the nodes of a distributed system agree on a value, or on an ordered log of values, even when some nodes fail.
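The MapReduce model can be illustrated with the classic word-count example. This sketch runs the map, shuffle, and reduce phases in a single Python process purely to show the data flow; a real framework would run map and reduce tasks on many nodes and move the intermediate pairs over the network. All function names are hypothetical.

```python
from collections import defaultdict
from collections.abc import Iterable, Iterator


def map_phase(document: str) -> Iterator[tuple[str, int]]:
    """Map: emit an intermediate (word, 1) pair for every word."""
    for word in document.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group values by key (a cluster would route each key to one reducer)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine every value emitted for one key."""
    return key, sum(values)


def word_count(documents: list[str]) -> dict[str, int]:
    # Each document could be mapped on a different node; here everything runs locally.
    intermediate = (pair for doc in documents for pair in map_phase(doc))
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())
```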

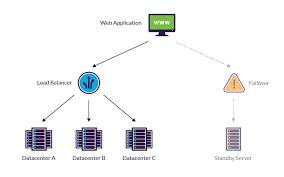

Fault Tolerance in Distributed Systems

Strategies:

Redundancy, replication, and failover mechanisms are used to ensure that the system continues to operate even if some nodes fail.

Example:

Cloud services often replicate data across multiple data centers to ensure that a failure in one location does not disrupt service.
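A minimal sketch of the failover idea, assuming a client that knows every replica holding a copy of the data. `Replica`, `ReplicaUnavailable`, and `read_with_failover` are hypothetical in-memory stand-ins for remote data centers, not a real cloud API.

```python
class ReplicaUnavailable(Exception):
    """Raised by a replica that cannot serve a request (hypothetical)."""


class Replica:
    """A hypothetical in-memory replica standing in for a remote data center."""

    def __init__(self, name: str, data: dict[str, str], healthy: bool = True):
        self.name = name
        self.data = dict(data)  # each replica keeps its own copy
        self.healthy = healthy

    def read(self, key: str) -> str:
        if not self.healthy:
            raise ReplicaUnavailable(self.name)
        return self.data[key]


def read_with_failover(replicas: list[Replica], key: str) -> str:
    """Try each replica in order; a failed replica is skipped rather than fatal."""
    errors = []
    for replica in replicas:
        try:
            return replica.read(key)
        except ReplicaUnavailable as exc:
            errors.append(str(exc))
    raise RuntimeError(f"all replicas failed: {errors}")
```

Production systems usually pair this with health checks, so a replica that keeps failing is taken out of rotation instead of being retried on every request.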

Communication Protocols

Definition:

Protocols such as TCP/IP, HTTP, and RPC (Remote Procedure Call) define the rules for data exchange between nodes in a distributed system.

Importance:

These protocols ensure that data is transmitted reliably and efficiently across the network, even when nodes are geographically dispersed.

3. Best Practices for Designing Parallel and Distributed Systems

Scalability and Load Balancing

Scalability:

Design systems to handle increasing workloads by adding more processors (parallel) or more nodes (distributed) without significant performance degradation.

Load Balancing:

Distribute work evenly across processors or nodes to prevent bottlenecks and maximize resource utilization.
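One simple load-balancing policy is to give each task to the worker with the least work so far. The sketch below assumes task costs are known in advance and uses the longest-processing-time-first (LPT) heuristic, placing the largest tasks first; `assign_least_loaded` is a hypothetical name, and real balancers often estimate load from live metrics instead.

```python
import heapq


def assign_least_loaded(task_costs: list[float], workers: int) -> list[list[int]]:
    """Greedy load balancing: each task goes to the currently least-loaded worker.

    Tasks are placed largest-first (the LPT heuristic), which tends to even out
    the final loads. Returns, for each worker, the indices of its tasks.
    """
    heap = [(0.0, w) for w in range(workers)]  # (current load, worker id); sorted = valid heap
    assignment: list[list[int]] = [[] for _ in range(workers)]
    order = sorted(range(len(task_costs)), key=lambda i: task_costs[i], reverse=True)
    for i in order:
        load, w = heapq.heappop(heap)  # least-loaded worker right now
        assignment[w].append(i)
        heapq.heappush(heap, (load + task_costs[i], w))
    return assignment
```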

Fault Tolerance and Resilience

Redundancy:

Implement redundant components to ensure that the system remains operational even if some parts fail.

Monitoring and Recovery:

Use real-time monitoring and automated recovery processes to quickly detect and respond to failures.
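Automated recovery often starts with retrying a failed call after a growing delay (exponential backoff). A minimal sketch, assuming transient failures surface as Python’s built-in `ConnectionError`; `call_with_retries` is hypothetical, and the `sleep` parameter exists only so tests can skip real waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(operation: Callable[[], T], attempts: int = 4,
                      base_delay: float = 0.1,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry a failing call, doubling the wait after each failure."""
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure to monitoring
            sleep(base_delay * 2 ** attempt)  # 0.1 s, 0.2 s, 0.4 s, ...
    raise ValueError("attempts must be at least 1")
```

Many systems also add random jitter to the delay so that clients recovering at the same moment do not retry in lockstep.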

Efficient Communication

Minimize Overhead:

Optimize communication protocols to reduce latency and bandwidth usage, especially in distributed systems.

Data Locality:

Wherever possible, design systems to process data locally, reducing the need for extensive data transfer across nodes.
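Data locality can be illustrated with a toy scheduler that prefers to run each task on a node that already stores its input. The layout below, where each data block maps to the nodes holding a replica, is a hypothetical simplification, and each node accepts one task; `schedule_with_locality` is a hypothetical name.

```python
def schedule_with_locality(blocks: dict[str, list[str]],
                           free_nodes: set[str]) -> dict[str, str]:
    """Place each task on a free node that already stores its input block.

    `blocks` maps a block id to the nodes holding a replica of it (hypothetical
    layout). Tasks fall back to any free node, which then needs a network copy.
    """
    placement: dict[str, str] = {}
    available = set(free_nodes)
    for block, holders in blocks.items():
        local = [n for n in holders if n in available]
        if local:
            node = local[0]  # data-local: no transfer needed
        elif available:
            node = sorted(available)[0]  # remote: input must be fetched over the network
        else:
            break  # no capacity left; remaining tasks wait
        placement[block] = node
        available.discard(node)
    return placement
```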

Security Considerations

Encryption:

Secure data in transit and at rest using strong encryption protocols.

Authentication and Authorization:

Ensure that only authorized nodes and users can access system resources, protecting against unauthorized access and data breaches.
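For node-to-node authentication, one common building block is a message authentication code. This sketch uses the standard `hmac` module with a shared secret, assuming both nodes already hold the same key (the hard-coded `SHARED_KEY` is a hypothetical placeholder, not a recommendation). An HMAC shows that a message came from a key holder and was not altered; it does not hide the contents, which is the job of encryption in transit such as TLS.

```python
import hashlib
import hmac
import json

SHARED_KEY = b"replace-with-a-secret-from-a-key-manager"  # hypothetical placeholder key


def sign(message: dict, key: bytes = SHARED_KEY) -> dict:
    """Attach an HMAC-SHA256 tag computed over a canonical JSON encoding."""
    body = json.dumps(message, sort_keys=True).encode()
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"body": message, "tag": tag}


def verify(envelope: dict, key: bytes = SHARED_KEY) -> bool:
    """Recompute the tag and check it against the one that was sent."""
    body = json.dumps(envelope["body"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, envelope["tag"])
```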

4. Real-World Examples and Case Studies

Case Study: Supercomputing and Scientific Research

Scenario:

Supercomputers used in climate modeling, genomics, and astrophysics rely on parallel computing to process enormous amounts of data quickly.

Implementation:

Systems such as the Cray supercomputers utilize thousands of processors working in parallel to perform complex calculations. Researchers employ parallel algorithms to simulate weather patterns, analyze genetic sequences, and explore cosmic phenomena.

Outcome:

The ability to process large-scale simulations in a fraction of the time has led to breakthroughs in scientific research, improving our understanding of natural phenomena.

Case Study: Cloud Services and Distributed Computing

Scenario:

Major cloud service providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud use distributed computing to deliver scalable, reliable services to millions of users worldwide.

Implementation:

These platforms rely on distributed architectures, load balancing, and fault-tolerant mechanisms to manage data centers spread across the globe. Services like AWS EC2 and Google Compute Engine enable users to run applications that scale dynamically based on demand.

Outcome:

The efficiency and resilience of these cloud services power everything from streaming platforms to enterprise applications, demonstrating the practical benefits of distributed computing.

Case Study: Online Retail and E-Commerce

Scenario:

An online retailer must process thousands of transactions per second while ensuring high availability and responsiveness.

Implementation:

The retailer uses a combination of parallel computing (to process orders concurrently) and distributed systems (to manage data across multiple servers and data centers). Techniques such as sharding, load balancing, and replication are employed to maintain performance during peak times.

Outcome:

Enhanced performance, reduced downtime, and a seamless shopping experience contribute to increased customer satisfaction and revenue growth.
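The sharding technique in this case study can be sketched as hash-based routing: each customer or order key is hashed to pick the server (shard) that stores it. `shard_for` and `route` are hypothetical helpers, and simple modulo placement is an assumption for illustration.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Pick a shard for a key using a hash that is stable across processes.

    Python's built-in hash() is randomized per process for strings, so a
    SHA-256 digest is used instead; every server computes the same answer.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def route(keys: list[str], num_shards: int) -> dict[int, list[str]]:
    """Group keys by the shard (server) responsible for storing them."""
    shards: dict[int, list[str]] = {i: [] for i in range(num_shards)}
    for key in keys:
        shards[shard_for(key, num_shards)].append(key)
    return shards
```

With plain modulo placement, changing the number of shards moves most keys to a different shard; consistent hashing is a common alternative that limits that movement.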

Case Study: Telecommunications and 5G Networks

Scenario:

The rollout of 5G networks demands rapid data processing and real-time communication across billions of devices.

Implementation:

Telecommunications companies use parallel and distributed computing to manage network traffic, optimize bandwidth usage, and ensure low latency in data transmission.

Outcome:

Improved network performance supports new applications such as autonomous vehicles, remote surgery, and immersive virtual reality experiences, showcasing the transformative impact of these technologies.

The Importance, Applications, and Benefits of Parallel and Distributed Computing

Understanding Parallel and Distributed Computing is essential for harnessing the full potential of modern technology. Here’s why these paradigms matter:

Enhanced Performance and Speed

Rapid Processing:

By dividing tasks among multiple processors or nodes, these systems can complete complex computations much faster than a single processor could.

Scalability:

Both parallel and distributed systems are designed to scale, ensuring that as data volumes and computational demands grow, performance remains robust.

Improved Efficiency and Resource Utilization

Optimal Use of Resources:

Parallel computing maximizes the use of multi-core processors, while distributed computing leverages the power of networks to share resources effectively.

Cost Savings:

Increased efficiency can lead to significant cost reductions, particularly in large-scale systems such as data centers and cloud platforms.

Robustness and Fault Tolerance

Resilient Systems:

Distributed architectures provide redundancy and fault tolerance, ensuring that the failure of a single component does not cripple the entire system.

Continuous Availability:

High-availability systems built on parallel and distributed principles minimize downtime, which is critical for mission-critical applications in finance, healthcare, and public services.

Broad Applicability Across Industries

Scientific Research:

Complex simulations and data analyses in fields like climate science, genomics, and astrophysics rely on these computing paradigms.

Business and Finance:

Real-time analytics, algorithmic trading, and enterprise resource planning systems benefit greatly from parallel and distributed processing.

Everyday Applications:

From streaming services and social media to online gaming and smart city infrastructure, these technologies underpin the digital experiences that shape our daily lives.

Driving Innovation and Future Growth

Enabling New Technologies:

Advances in AI, machine learning, and the Internet of Things (IoT) are made possible by parallel and distributed computing, which provide the necessary computational power and scalability.

Supporting Global Connectivity:

The development of robust, distributed networks drives global communication, collaboration, and innovation, shaping a more connected and efficient world.

Addressing Common Misconceptions and FAQs

Despite their critical role in modern technology, several misconceptions about Parallel and Distributed Computing persist. Let’s clear up these misunderstandings and answer some frequently asked questions.

Common Misconceptions

Misconception 1: “Parallel and distributed computing are only relevant for high-performance computing.”

Reality: While these paradigms are crucial for supercomputers and data centers, they also underpin everyday applications such as web browsing, streaming, and mobile computing.

Misconception 2: “They are too complex for most applications.”

Reality: Advances in software frameworks and cloud platforms have made parallel and distributed computing more accessible, enabling businesses of all sizes to leverage these technologies.

Misconception 3: “Scalability means simply adding more machines.”

Reality: True scalability involves optimizing algorithms, load balancing, and efficient resource management—not just increasing the number of processors or nodes.
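Amdahl’s law, a classic result in parallel computing, makes this concrete: if a fraction p of a program’s work can run in parallel, the speedup on N processors is at most 1 / ((1 - p) + p / N). A short sketch of that formula (`amdahl_speedup` is a hypothetical name):

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Upper bound on speedup when only part of the work can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial = 1.0 - parallel_fraction  # the part that never speeds up
    return 1.0 / (serial + parallel_fraction / processors)


if __name__ == "__main__":
    # With 90% parallel work the bound approaches 1 / 0.1 = 10x, however many processors.
    for n in (1, 10, 100, 1000):
        print(n, round(amdahl_speedup(0.9, n), 2))
```

With 90% of the work parallelized, for example, 10 processors give a speedup of about 5.3x, and no number of processors can exceed 10x, because the serial 10% always remains.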

Frequently Asked Questions (FAQs)

Q1: What is the difference between parallel and distributed computing?

A1: Parallel computing involves multiple processors working simultaneously within a single system to execute tasks, while distributed computing uses a network of independent computers to perform computations collectively.

Q2: How does algorithm design change for parallel and distributed systems?

A2: Algorithms for these systems must be designed to minimize communication overhead, manage data dependencies, and handle synchronization between multiple processing units or nodes.

Q3: What are some common applications of parallel and distributed computing?

A3: They are widely used in scientific simulations, big data analytics, cloud computing, real-time processing (e.g., 5G networks), and many other fields where large-scale computation is required.

Q4: Can these paradigms improve everyday applications?

A4: Absolutely. Many everyday services, such as online streaming, social media, and e-commerce platforms, rely on parallel and distributed processing to deliver fast, responsive experiences to users.

Modern Relevance and Current Trends in Parallel and Distributed Computing

As technology advances, the importance and complexity of parallel and distributed computing continue to grow. Here are some modern trends and emerging practices shaping the future of these computing paradigms:

Cloud Computing and Virtualization

On-Demand Scalability:

Cloud platforms enable organizations to dynamically scale their computing resources based on demand, leveraging distributed computing to manage large workloads efficiently.

Containerization:

Technologies like Docker and Kubernetes have revolutionized the deployment of distributed applications, allowing for rapid, consistent scaling and orchestration of services.

The Internet of Things (IoT) and Edge Computing

Decentralized Processing:

IoT devices generate massive amounts of data that is often processed locally or on the “edge” of the network to reduce latency and improve responsiveness.

Integration of Edge and Cloud:

Hybrid architectures combine edge computing with centralized cloud services, ensuring that data is processed efficiently and securely across distributed networks.

Advances in Artificial Intelligence and Machine Learning

Parallel Algorithms in AI:

Machine learning models, especially deep neural networks, require parallel processing for training on large datasets. Techniques such as data parallelism and model parallelism have become standard.

Distributed Learning:

Distributed computing frameworks are enabling large-scale AI training across multiple nodes, reducing training time and making it practical to train larger models on larger datasets.
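Data parallelism can be simulated in a few lines: each worker computes a gradient on its own shard of the data, and the gradients are averaged before every update. This pure-Python sketch assumes a one-parameter linear model (y = w * x) trained with mean squared error and runs the workers sequentially; real frameworks perform the averaging with a collective all-reduce across devices. All names are hypothetical.

```python
Shard = tuple[list[float], list[float]]  # (inputs, targets) held by one worker


def gradient(w: float, xs: list[float], ys: list[float]) -> float:
    """Derivative of mean squared error for the model y = w * x, on one shard."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_step(w: float, shards: list[Shard], lr: float) -> float:
    # Each worker computes a gradient on its own shard (simulated sequentially here).
    local = [(gradient(w, xs, ys), len(xs)) for xs, ys in shards]
    # Averaging step (an all-reduce in a real cluster), weighted by shard size so the
    # update equals a full-batch gradient step on the combined data.
    total = sum(n for _, n in local)
    avg_grad = sum(g * n for g, n in local) / total
    return w - lr * avg_grad


def train(shards: list[Shard], steps: int = 100, lr: float = 0.01) -> float:
    w = 0.0
    for _ in range(steps):
        w = data_parallel_step(w, shards, lr)  # every replica applies the same update
    return w
```

Weighting by shard size makes each step mathematically identical to a full-batch gradient step, so the combined model matches what a single machine would learn; the gain in practice comes from the shards being processed at the same time.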

Enhancements in Network Infrastructure

5G and Beyond:

The advent of 5G networks is revolutionizing data transfer speeds and connectivity, supporting more robust distributed systems and real-time processing.

High-Speed Interconnects:

Advances in networking hardware, such as faster switches and fiber-optic technology, are reducing latency and improving the performance of parallel systems.

Security and Fault Tolerance

Robust and Resilient Systems:

Ensuring fault tolerance and robust security in parallel and distributed systems is more critical than ever. New techniques in encryption, distributed consensus, and self-healing architectures are being developed to enhance system reliability.

Zero Trust Architectures:

As distributed systems become more prevalent, adopting zero trust security models ensures that every component is continuously verified, protecting against breaches and ensuring data integrity.

Conclusion: The Future of Parallel and Distributed Computing

Parallel and Distributed Computing are not just technical paradigms; they are transformative forces driving the digital revolution. By leveraging the power of multiple processors and interconnected networks, these approaches enable rapid data processing, high availability, and scalable performance across a wide range of applications. As we continue to generate and rely on vast amounts of data, the importance of these computing paradigms will only grow.

Key Takeaways

Essential for Modern Technology:

Parallel and distributed computing form the backbone of everything from cloud services and AI to real-time analytics and global communication.

Enhanced Performance and Efficiency:

These paradigms allow for significant speed improvements and better resource utilization, enabling systems to scale and handle increasingly complex tasks.

Broad Impact:

Their applications span industries, from scientific research and finance to healthcare and smart cities, driving innovation and improving quality of life.

Continuous Evolution:

Advances in cloud computing, IoT, AI, and network infrastructure ensure that parallel and distributed computing will continue to evolve and shape the future of technology.

Call-to-Action

Reflect on how parallel and distributed computing impact your work and daily life—whether you’re using a smartphone, streaming a video, or conducting cutting-edge research. Understanding these paradigms can empower you to harness their potential, optimize your systems, and innovate more effectively. We invite you to share your experiences, ask questions, and join our conversation about the future of computing. If you found this guide helpful, please share it with colleagues, friends, and anyone eager to explore the transformative power of parallel and distributed computing.

For more insights into technology, digital transformation, and advanced computing, visit reputable sources such as Harvard Business Review and Forbes. Embrace the future of parallel and distributed computing and drive a smarter, faster, and more connected world!

Additional Resources and Further Reading

For those who wish to delve deeper into Parallel and Distributed Computing, here are some valuable resources:

Books:

- “Parallel Programming in C with MPI and OpenMP” by Michael J. Quinn

- “Distributed Systems: Principles and Paradigms” by Andrew S. Tanenbaum and Maarten van Steen

- “High Performance Computing” by Kevin Dowd and Charles Severance

Online Courses and Workshops:

- Coursera’s Parallel, Concurrent, and Distributed Programming in Java

- edX’s High Performance Computing Courses

- Udacity’s Nanodegree Programs on Cloud Computing and Distributed Systems


Communities and Forums:

- Engage with experts on Reddit’s r/parallelprogramming and r/distributedsystems for practical advice and discussions.

- Join LinkedIn groups dedicated to cloud computing, high-performance computing, and distributed systems to share insights and learn from professionals.

Final Thoughts

Parallel and distributed computing are at the core of today’s digital innovations. They empower organizations and individuals to process data faster, scale operations seamlessly, and build systems that are both robust and resilient. By understanding these paradigms, you not only gain insights into the current state of technology but also equip yourself to contribute to its future evolution.

Thank you for reading this comprehensive guide on Parallel and Distributed Computing. We look forward to your feedback, questions, and success stories—please leave your comments below, share this post with your network, and join our ongoing conversation about shaping the future of computation.

Happy computing, and here’s to a future of endless innovation powered by parallel and distributed technologies!
