Mastering Recursion: The Key to Simplifying Complex Problems

Recursion is one of the most powerful tools in programming, allowing you to break down complex problems into simpler, smaller subproblems. This blog post dives deep into the fundamentals of recursion, explores its advantages over loops, and demonstrates its applications with practical examples. If you’re preparing for the AP Computer Science A exam or looking to strengthen your programming skills, this is your ultimate guide to understanding and mastering recursion.

What Is Recursion?

In programming, recursion is a technique where a function calls itself to solve a problem. This self-referential approach is particularly useful for problems that can be divided into smaller, repetitive tasks.

Anatomy of a Recursive Method

A recursive method consists of two essential components:

- Base Case: The condition where the recursion stops. It prevents infinite loops and provides a solution to the simplest instance of the problem.

- Recursive Case: The part where the method calls itself with modified parameters, gradually reducing the problem until the base case is reached.

Here’s the basic structure of a recursive method:

public static void recursiveMethod() {

if (baseCaseCondition) { // Base case

// Perform base case logic

} else {

// Perform some action

recursiveMethod(); // Recursive call

}

}

Why Use Recursion?

While loops can often achieve the same results as recursion, recursion offers several unique advantages:

- Code Simplification: Recursive solutions are often more concise and easier to understand, especially for problems like tree traversal or mathematical sequences.

- Natural Fit for Divide-and-Conquer: Recursive methods are ideal for problems that can be divided into smaller, similar subproblems (e.g., sorting algorithms, searching algorithms).

- Elegance: Recursion provides a cleaner and more intuitive way to implement algorithms compared to iterative loops.

Base Case: The Foundation of Recursion

The base case is the most crucial part of any recursive function. It defines when the recursion should stop. Without a well-defined base case, the function may result in infinite recursion, causing a StackOverflowError.

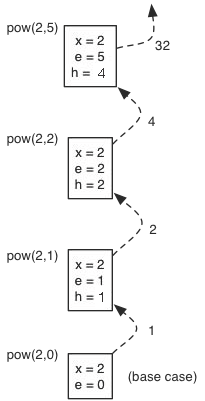

Recursive Calls and the Call Stack

When a recursive function is called, each call is stored in a call stack until the base case is reached. The stack keeps track of each function call’s state, allowing the program to return to the previous call with the correct parameters.

Visualizing the Call Stack

Consider the recursive factorial function:

- Call Stack (Descending): Each recursive call adds a new layer to the stack.

- Return Stack (Ascending): Once the base case is reached, the results are returned layer by layer.

This mechanism ensures that all intermediate calculations are preserved.

Recursion vs. Loops: A Comparison

While recursion and iteration (loops) can achieve the same results, each has its strengths and weaknesses.

| Feature | Recursion | Loops |

|---|---|---|

| Code Clarity | Cleaner and more intuitive | May become verbose for complex tasks |

| Efficiency | Higher memory usage (due to call stack) | More efficient for large datasets |

| Use Case | Best for divide-and-conquer problems | Best for straightforward iterations |

Example: Multiplication Using Repeated Addition

Iterative Version

public static int multiply(int a, int b) {

int sum = 0;

for (int i = 0; i < b; i++) {

sum += a;

}

return sum;

}

Recursive Version

public static int multiply(int a, int b) {

if (b == 0) { // Base case

return 0;

}

return a + multiply(a, b - 1); // Recursive case

}

Practical Applications of Recursion

1. Array Traversal

Recursive Array Traversal

public static void traverseArray(int[] array, int index) {

if (index == array.length) { // Base case

return;

}

System.out.println(array[index]);

traverseArray(array, index + 1); // Recursive call

}

Iterative Array Traversal

public static void traverseArray(int[] array) {

for (int i = 0; i < array.length; i++) {

System.out.println(array[i]);

}

}

2. Binary Search

Binary search is a classic example of recursion, efficiently searching for an element in a sorted array by dividing the search space in half.

Recursive Binary Search

public int binarySearch(int[] array, int target, int low, int high) {

if (low > high) { // Base case

return -1;

}

int mid = (low + high) / 2;

if (array[mid] == target) {

return mid;

} else if (target < array[mid]) {

return binarySearch(array, target, low, mid - 1);

} else {

return binarySearch(array, target, mid + 1, high);

}

}

3. Merge Sort

Merge sort is a divide-and-conquer algorithm that uses recursion to sort an array.

public void mergeSort(int[] array) {

if (array.length > 1) { // Base case

int mid = array.length / 2;

int[] left = Arrays.copyOfRange(array, 0, mid);

int[] right = Arrays.copyOfRange(array, mid, array.length);

mergeSort(left);

mergeSort(right);

merge(array, left, right);

}

}

public void merge(int[] result, int[] left, int[] right) {

int i = 0, j = 0, k = 0;

while (i < left.length && j < right.length) {

if (left[i] < right[j]) {

result[k++] = left[i++];

} else {

result[k++] = right[j++];

}

}

while (i < left.length) result[k++] = left[i++];

while (j < right.length) result[k++] = right[j++];

}

Key Takeaways

- Base Case is Crucial: Always define a clear base case to prevent infinite recursion.

- Understand the Call Stack: Visualizing the call stack helps trace recursive calls effectively.

- Know When to Use Recursion: While recursion simplifies complex problems, it can be less efficient than iteration.

Conclusion

Recursion is a cornerstone of computer science, offering an elegant solution to many problems. While it sacrifices some efficiency for simplicity, its power lies in its ability to handle complex, repetitive tasks with minimal code. Whether you’re traversing data structures, implementing search algorithms, or solving mathematical problems, mastering recursion is essential for every programmer.

Here is a detailed list of 50 FAQs about recursion, complete with well-explained answers, covering basic to advanced aspects of recursion.

1. What is Recursion in Programming?

Recursion is a process where a function calls itself to solve a smaller instance of the same problem until it reaches a base case.

2. What Are the Key Components of a Recursive Function?

- Base Case: The condition where recursion stops.

- Recursive Case: The part of the function that reduces the problem.

3. What is a Base Case in Recursion?

A base case is a condition that ends the recursion to prevent infinite loops. For example, in a factorial function, the base case is when n == 0.

4. How Does the Call Stack Work in Recursion?

Each recursive call adds a new frame to the stack, storing the function’s execution state. When the base case is reached, the stack unwinds as the calls return.

5. What is Tail Recursion?

Tail recursion is when the recursive call is the last operation in the function, allowing optimization by reusing the current stack frame.

def factorial_tail(n, acc=1):

if n == 0:

return acc

return factorial_tail(n-1, acc*n)

6. What is the Difference Between Recursion and Iteration?

- Recursion: Uses the call stack and is often more elegant for hierarchical problems.

- Iteration: Uses loops and is more memory-efficient.

7. What is Mutual Recursion?

Mutual recursion occurs when two or more functions call each other.

def is_even(n):

if n == 0:

return True

return is_odd(n-1)

def is_odd(n):

if n == 0:

return False

return is_even(n-1)

8. What Are Common Use Cases of Recursion?

- Traversing trees or graphs.

- Implementing algorithms like quicksort and merge sort.

- Solving puzzles like the Tower of Hanoi.

9. What is a Recursive Algorithm?

A recursive algorithm solves a problem by solving smaller instances of itself.

10. How to Prevent Stack Overflow in Recursion?

- Define a clear base case.

- Optimize using tail recursion.

- Use memoization or switch to iteration for large inputs.

11. What is the Fibonacci Series Using Recursion?

def fibonacci(n):

if n <= 1:

return n

return fibonacci(n-1) + fibonacci(n-2)

12. What is Recursion Limit in Python?

Python has a default recursion limit of 1000. You can adjust it using:

import sys

sys.setrecursionlimit(2000)

13. What is Backtracking in Recursion?

Backtracking is a recursive method to solve problems by exploring all possibilities and retracting when an invalid state is encountered.

14. What Are the Advantages of Recursion?

- Simplifies code for hierarchical and divide-and-conquer problems.

- Eliminates the need for explicit stack management.

15. What Are the Disadvantages of Recursion?

- Higher memory usage due to the call stack.

- Slower for deep recursions compared to iteration.

16. What is Divide-and-Conquer Using Recursion?

Divide-and-conquer splits a problem into smaller subproblems, solves them recursively, and combines their results.

17. What is Memoization in Recursion?

Memoization stores results of expensive recursive calls to avoid redundant computations.

18. How to Debug Recursive Functions?

- Add print statements to trace recursive calls.

- Use debuggers to step through each call.

19. What is Infinite Recursion?

Infinite recursion occurs when a recursive function lacks a valid base case.

20. How Does Recursion Work in Tree Traversal?

Tree traversal methods like inorder, preorder, and postorder are implemented recursively.

21. What is the Time Complexity of Recursive Algorithms?

Depends on the number of recursive calls and operations per call. E.g., Fibonacci without memoization is O(2^n).

22. What is Factorial Using Recursion?

def factorial(n):

if n == 0:

return 1

return n * factorial(n-1)

23. What Are Common Recursion Problems?

- Tower of Hanoi.

- Permutations and combinations.

- Solving mazes.

24. What is Recursion Depth?

The depth of recursion refers to the number of recursive calls in the call stack at a time.

25. Can Recursion Replace Iteration?

Recursion can replace iteration but might be less efficient for non-hierarchical problems.

26. What is Indirect Recursion?

Indirect recursion occurs when function A calls function B, which then calls function A.

27. What Are Tail Recursive Functions?

Functions where the recursive call is the final operation, allowing stack frame optimization.

28. How to Convert Recursive Code to Iterative Code?

Use an explicit stack or queue to mimic the call stack.

29. What is Recursion in Sorting Algorithms?

Algorithms like quicksort and merge sort use recursion to divide and conquer arrays.

30. What is Recursion in Graph Traversal?

Depth-first search (DFS) uses recursion to traverse graph nodes.

31. What is the Role of Recursion in Dynamic Programming?

Dynamic programming combines recursion with memoization to solve problems with overlapping subproblems.

32. How to Handle Large Inputs in Recursive Functions?

- Use tail recursion where supported.

- Optimize with memoization.

- Consider iterative solutions.

33. What Are the Differences Between Tail and Non-Tail Recursion?

- Tail Recursion: No operations after the recursive call.

- Non-Tail Recursion: Operations are performed after the recursive call.

34. What is the Tower of Hanoi Problem?

A classic recursion problem involving moving disks between rods with constraints.

35. What Are Recursion Trees?

A recursion tree visually represents recursive calls and their results.

36. What is Base Case Validation?

Ensuring the base case is reachable and logically terminates the recursion.

37. How to Optimize Recursive Functions?

- Use dynamic programming techniques.

- Implement tail recursion if the language supports it.

- Limit recursion depth where possible.

38. What is the Difference Between Structural and Functional Recursion?

- Structural Recursion: Follows the structure of data (e.g., trees).\n

- Functional Recursion: Breaks problems into functional components.

39. What Are Recursive Data Structures?

Data structures like linked lists and trees are naturally recursive, as their definition references themselves.

40. How to Prevent Infinite Recursion?

Ensure the base case is correct and reachable for all input scenarios.

41. What is Recursion in Functional Programming?

Functional programming often uses recursion instead of loops for iterations.

42. What is the Role of Stack Frames in Recursion?

Each recursive call creates a new stack frame to store local variables and execution context.

43. What Are Recursive Generators?

Recursive generators yield values lazily using recursion, often used in Python.

44. What is Tree Recursion?

Tree recursion occurs when a function makes multiple recursive calls.

45. What Are Recursive Calls in Parallel Programming?

Some frameworks allow recursive calls to run in parallel to improve performance.

46. How to Trace Recursive Calls?

Use a debugger or print function parameters at each call to trace execution.

47. What Are Non-Trivial Recursion Problems?

- Solving Sudoku.\n

- Parsing mathematical expressions.

48. What Are the Risks of Recursion?

- Stack overflow.\n

- Performance overhead.

49. What Are Alternatives to Recursion?

- Iterative solutions.\n

- Divide-and-conquer using explicit stacks.

50. Why is Recursion Important in Algorithms?

Recursion simplifies complex problems, making code easier to understand for hierarchical or nested structures.